Whilst trying to check in for a flight recently, I kept receiving an error message that my passport credentials could not be accepted. I re-entered the information many times, had others review the information I submitted and even called customer support. All to no avail. I had to check in at the counter once I arrived at the airport. There was no other way around it, meaning I needed to arrive earlier.

After my trip was completed, I received an email asking me to rate how likely I’d be to recommend the airline to a friend or colleague: the well-known Net Promoter Score (NPS) question. I gave a rating of 5 out of 10, which categorised me as a detractor.
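For readers less familiar with how that rating becomes a score, here is a minimal sketch of the standard NPS arithmetic: ratings of 9 or 10 count as promoters, 7 or 8 as passives, and 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function names and the sample ratings are hypothetical and purely illustrative.

```python
from collections import Counter


def nps_category(rating: int) -> str:
    """Classify a single 0-10 rating using the standard NPS bands."""
    if not 0 <= rating <= 10:
        raise ValueError("NPS ratings must be between 0 and 10")
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(ratings: list[int]) -> float:
    """Return % promoters minus % detractors, on a -100 to 100 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    counts = Counter(nps_category(r) for r in ratings)
    return 100 * (counts["promoter"] - counts["detractor"]) / len(ratings)


# Hypothetical survey responses, invented for illustration only.
sample_ratings = [10, 9, 8, 7, 5, 3, 10, 6]

print(nps_category(5))                     # detractor
print(net_promoter_score(sample_ratings))  # 0.0
```

Note that the result is a single number: nothing in it records whether a detractor was unhappy about online check-in, a delay, or something else entirely.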

Now, what will the people who receive and review that data think the lower scores are based on? How will the team extract actual insights from all their customers’ different experiences of flying with the airline? How will they work out which parts of the overall journey contained the key pain points? Was it an online check-in issue, or was it a delayed flight?

NPS can be a valuable measuring stick. But to make it valuable, a gap needs to be bridged between the high-level, north-star metric and actionable insights.

NPS gaps

NPS and other post-experience measurements are deployed when a journey is complete. However, this limits feedback to only those who completed that journey.

For example, a car insurance company may deploy NPS after the completion of the online application journey. What is missing are those who tried to complete the application but were unable to do so and left the website altogether.

This could be partly addressed by emailing those who dropped out and asking “Why did you not complete the online application?” However, the answers still depend on who chooses to reply. Because participants decide for themselves whether to provide feedback, the results may be skewed, and we could still be missing important information.

Another gap is that NPS tells you that something is wrong, but not why. This is often the case with high-level analytics data. It provides a score that can be tracked over time and signals whether things are trending up or down, but it doesn’t provide a reason for the score. On its own, therefore, it can’t tell companies how to improve the experience.

Filling the gaps with UX measuring tools

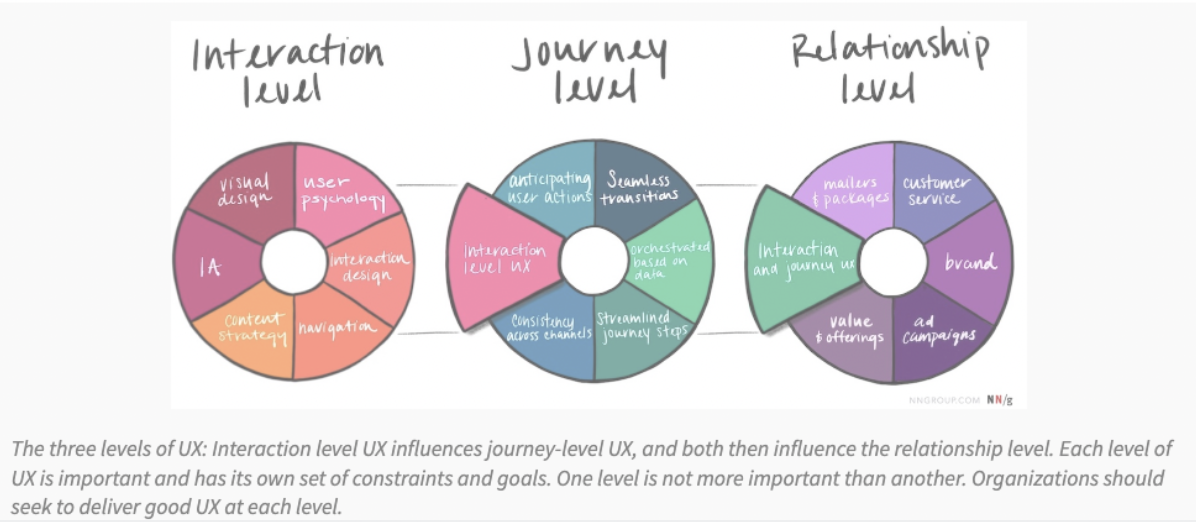

The relationship between CX (customer experience) and UX (user experience) can be understood as different levels of interaction between user and company. The broader customer experience, often measured by CSAT or NPS, describes the overall relationship between the user and the company, whereas UX helps us better understand specific journeys and individual interactions.

Since NPS captures high-level relationship data, we can focus on the more specific elements that make up the larger relationship. This includes key user journeys – filling in the gaps left by NPS. We can also be more proactive with this approach, measuring designs and pre-production environments rather than just live experiences.

Getting started – longitudinal measurement

We recommend regularly testing the live user experience. This measures its performance over time and surfaces insights that can be acted on or researched further.

To measure and test live experiences over time, or measure against competitors, we need to determine what user journeys to focus on. Asking the following question might help you set your priorities:

What are the key journeys or jobs to be done that are crucial for our users and ultimately impact key business metrics?

For airlines, it’s likely to be completing the booking process or editing an existing booking, which impacts revenue and reduces calls to customer support. For supermarkets, it may be finding ingredient information for a product, which impacts revenue and cuts costs by reducing returns. Identifying those key journeys is step one.

Next, we need to identify the measurement tool. There are many different UX measures available, including the System Usability Scale (SUS) and the Standardised User Experience Percentile Rank Questionnaire (SUPR-Q). Many of these tools focus on attitudinal components but might miss out on users’ actual behaviour.
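To make one of these measures concrete, here is a minimal sketch of the standard SUS scoring arithmetic. It assumes the usual ten-item questionnaire answered on a 1 to 5 scale, where odd-numbered items are positively worded and even-numbered items negatively worded. The function name and the sample responses are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Convert one participant's ten SUS answers (each 1-5) to a 0-100 score."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is response - 1.
            total += response - 1
        else:
            # Even items are negatively worded: contribution is 5 - response.
            total += 5 - response
    # Each item contributes 0-4, so the sum is 0-40; scale it to 0-100.
    return total * 2.5


# Hypothetical answers from a single participant, for illustration only.
participant = [4, 2, 4, 2, 5, 1, 4, 2, 4, 2]
print(sus_score(participant))  # 80.0
```

Scores from individual participants are typically averaged per journey and per testing round, which gives a single number that can be tracked over time alongside NPS.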

However, the important part of bridging the gaps of NPS is providing actionable insights. Whatever metric you choose, it’s a hook: a score that gets people engaged and asking for more. Therefore, select one UX measure and use it to monitor experiences over time. If you carefully track the customer’s journey over time, you will be able to suggest improvements in a timely manner.

Final thoughts

A key component of filling the gap of NPS is to ensure research is embedded in the “improvement” process. As we decide what areas to focus on before going into solutions, we need to ensure we understand users’ problems before we begin solving them. In this way, your team is taking a proactive approach to solving customer problems.

In the end, NPS is not going away anytime soon. Many organisations use it, and executives value and understand it. Measuring and tracking the UX of key user journeys on digital properties can help bridge the gaps that NPS inherently leaves.

This article was co-authored by UserZoom’s research partner, Sara Logel, and VP of UX research services at UserZoom, Lee Duddell.