Testing the IQ of an AI may not be a familiar concept, but it’s something companies must do if they want to ensure a great customer experience. As it stands, generative AI applications like ChatGPT, Bing and others are still a nascent space. They may perform well in generic scenarios, but when it comes to serving customers in specific use cases and across industries like retail or finance, different AIs perform better than others.

Instead of choosing an AI application arbitrarily, companies must work out which one performs best for their requirements. To do this, they must go through a process known as benchmarking. The more niche the industry or use case, the more critical it is that companies gauge how well an AI can grasp the correct terminology, understand customer questions and provide helpful responses.

What is benchmarking?

When we talk about benchmarking a gen AI, we are referring specifically to the large language model (LLM) on which the AI is based. LLMs use datasets to learn, communicate and generate content. This means the data they are trained on is integral to their level of understanding and performance. As all LLMs are trained on different datasets, not all gen AI applications perform the same.

Benchmarking is the process of comparing a LLM model with other similar purpose-built models or against a baseline performance score. The LLM is fed a set of high-quality questions that users are likely to ask, as well as an example of a high-quality, human-generated response to each question. The LLM uses these references as a benchmark that it can compare its own generated responses against when asked similar questions. Using assessment criteria called metrics, developers then test how closely the LLMs’ answers match the reference answers.

How benchmarking LLM models works

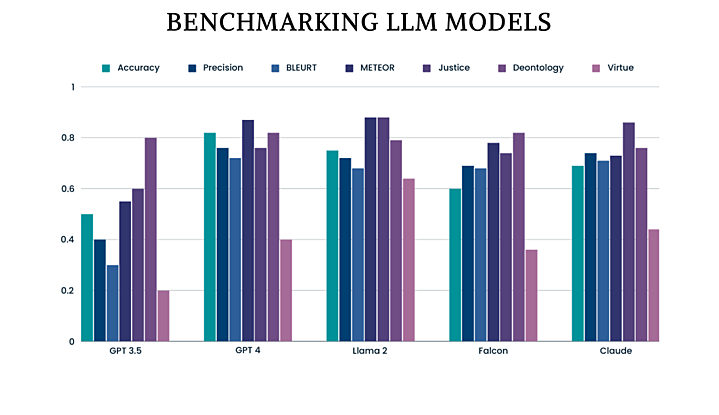

Each metric tests a different aspect of the LLM’s response. BLEURT, for example, evaluates how well the AI learns the statistical relationship between words. Whereas, METEOR measures the quality of language used.

Other metrics use mathematical equations to work out how well the AI demonstrates human characteristics. This includes virtue and justice, or how accurate and precise its responses are. The LLM is given a score for how well it performs on each metric. The higher the sum of the scores, the more closely the LLM’s answers match the human-generated reference answers. This part of the process is completely automated, allowing brands to scale and account for a vast number of potential responses.

To understand why benchmarking is so important, it is helpful to consider the alternative. Right now, a lot of gen AI applications rely on reinforcement learning, whereby users are asked to choose their favourite of two responses. This can mislead AI into thinking an answer is correct, where the user actually selected the best of a bad bunch. With benchmarking, on the other hand, any incorrect answer would receive a low score. Therefore, the AI can continue learning until it provides a better response.

Adding the human touch

Whatever technology they are developing, companies looking to create truly helpful digital experiences must include real users in testing. Otherwise, they risk missing serious issues that only humans can uncover, such as in relation to payment verification testing in the metaverse.

Just having a single developer coming up with reference questions and answers is not enough for the purposes of benchmarking. It’s essential that multiple humans are involved in creating the question and answer dataset. Without human perspectives, an AI can’t learn to convey authenticity, provide answers that are helpful to humans or keep improving over time. Brands should always select diverse groups of testers that match the age range, gender and nationality of their customers to provide multiple references.

For more specialised use cases like healthcare, brands should go a step further by selecting testers with specific domain expertise. Otherwise, the testers would have no way of knowing whether the responses generated by the LLM are accurate or helpful.

Making the right reference

However, the testing regime for LLMs is becoming more sophisticated. There’s so much to consider besides the customer experience, including ethics, responsibility and new regulations like the pending EU AI act, which is designed to ensure AI systems are safe, transparent and non-discriminatory. Recently, cases of training data being used without consent have led to copyright infringement and data privacy concerns.

As well as using metrics to test how helpful or accurate an LLMs response is, developers can also test them against response libraries that specialise in ethics. Thanks to the advancements in this area, aspects like deontology, virtue, utilitarianism, justice, etc. can be mathematically measured to assign an ethical score to an AI model.

Future proofing generative-AI

The adoption of generative AI shows no signs of slowing down. Used correctly, it can support a wide range of customer-facing applications and use cases. So, it’s critical that companies select a service that’s a good fit for their brand, product or customer. Benchmarking adds a level of testing and quality assurance that will help companies’ chosen AI service adapt to and continue meeting their needs until the next iteration. By then, companies will have a benchmarking template in place ready to put the latest version through its paces.